Research Approach

Trustworthy Evaluation of Generative AI for Security Applications

Research Motivation and Scope

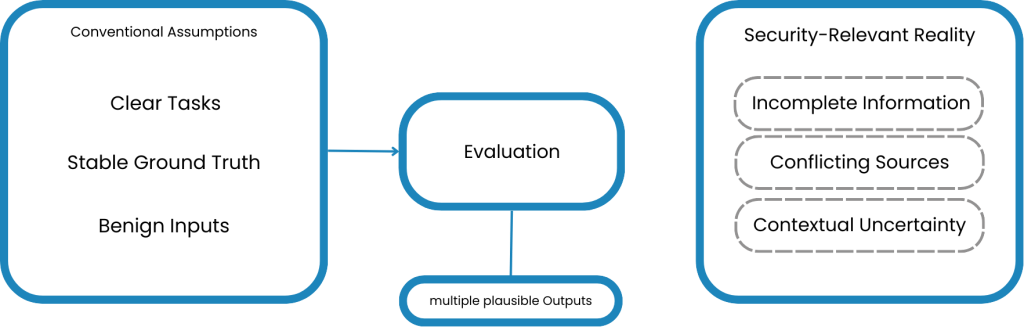

GenSeC focuses on the evaluation of generative foundation models in security-relevant operational contexts. In these settings, many assumptions that underlie conventional AI evaluation do not hold.

Security environments are characterized by:

-

underspecified or evolving tasks

-

incomplete, multilingual, or time-critical information

-

unstable or contested ground truth

-

deliberate manipulation and adversarial behavior

GenSeC starts from the premise that evaluation methodologies must explicitly reflect these conditions in order to produce results that are meaningful, actionable, and relevant for real-world use.

Ambiguity and Correctness

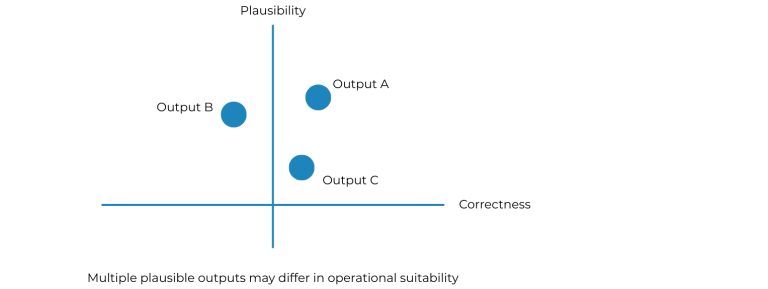

A central research question in GenSeC concerns the relationship between ambiguity and correctness.

In security contexts, generative models may produce:

- multiple outputs that are internally plausible

- yet differ significantly in their operational suitability

The project investigates how models behave under:

- underspecified prompts

- conflicting or heterogeneous information sources

- contextual uncertainty

These analyses directly inform the design of benchmarks and evaluation protocols that go beyond single “correct” outputs.

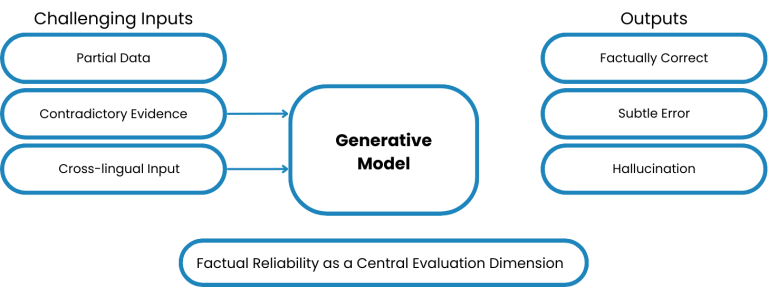

Factual Reliability & Model Behaviour

Another core focus of GenSeC is the systematic assessment of factual reliability.

The project examines:

-

how factual errors and hallucinations arise

-

how they manifest in textual, geospatial, and multimodal outputs

-

how such phenomena can be measured reproducibly

Model behavior is studied under challenging conditions, including:

-

partial data corruption

-

contradictory evidence

-

cross-lingual input

Rather than treating factuality as a secondary quality attribute, GenSeC integrates it as a central evaluation dimension across all use cases. This enables comparative analysis of model robustness and supports the identification of failure modes that are particularly critical in security settings.

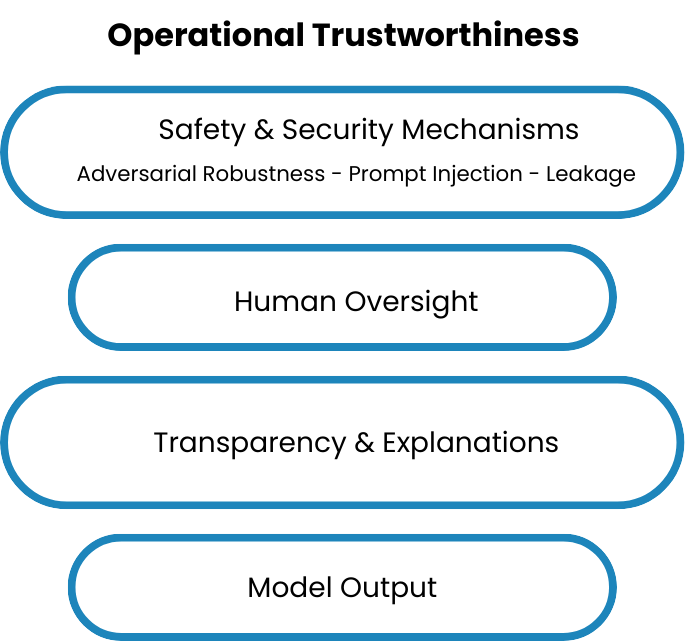

Transparency, Safety & Security

GenSeC approaches transparency as an operational requirement, not merely as a post-hoc explanatory feature.

The project investigates:

whether model outputs can be accompanied by stable and interpretable explanations

how sensitive these explanations are to small changes in input

how transparency supports human oversight and traceability in decision-making processes

In parallel, GenSeC addresses key safety and security challenges, including:

robustness against adversarial prompting

prompt injection attacks

unintended information leakage

By integrating transparency, safety, and security into a unified evaluation framework, GenSeC moves beyond task-level performance toward a comprehensive assessment of model trustworthiness.