Research Approach

Research Motivation and Scope

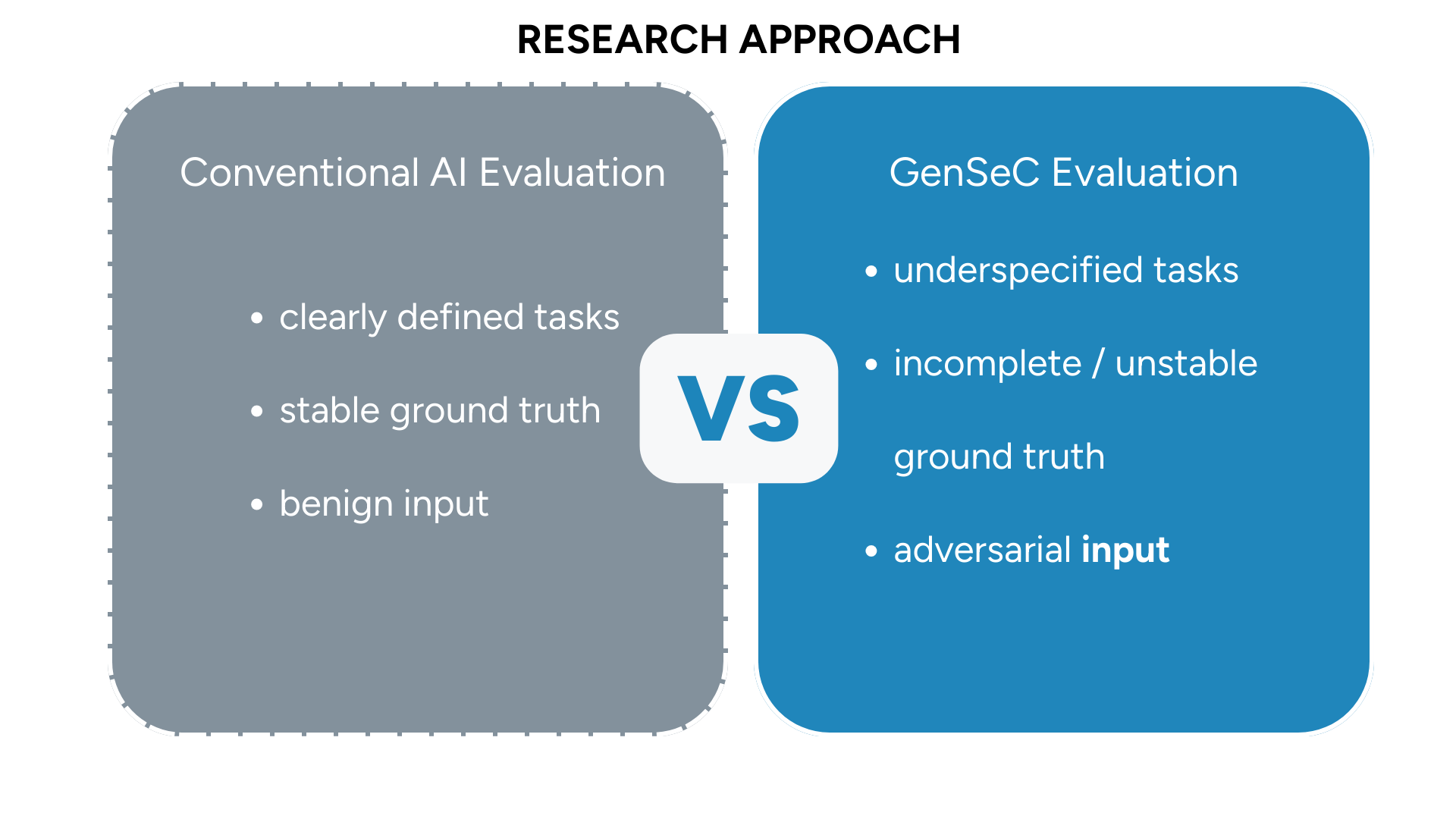

GenSeC investigates how generative foundation models can be evaluated in security-relevant operational contexts, where standard assumptions about clear tasks, stable ground truth, and benign inputs do not apply. Such environments are instead often characterized by incomplete, multilingual, time-critical, and potentially manipulated information. GenSeC is based on the premise that evaluation methodologies must explicitly reflect these conditions to be meaningful.

A central focus of the project is the relationship between ambiguity and correctness. GenSeC studies how models behave when multiple outputs appear plausible but differ in their operational suitability, and how this affects benchmark design and evaluation practices.

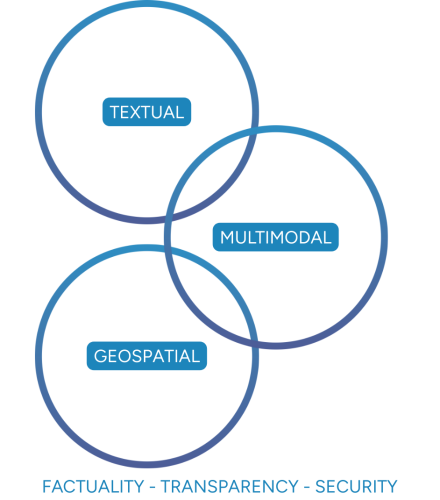

Across all use cases, GenSeC places particular emphasis on factual reliability, transparency, and security. The project systematically examines factual errors and hallucinations, the stability and interpretability of model explanations, and robustness against adversarial behavior. By integrating these dimensions into a unified evaluation framework, GenSeC aims to advance the assessment of model trustworthiness beyond task-level performance.

Use Cases

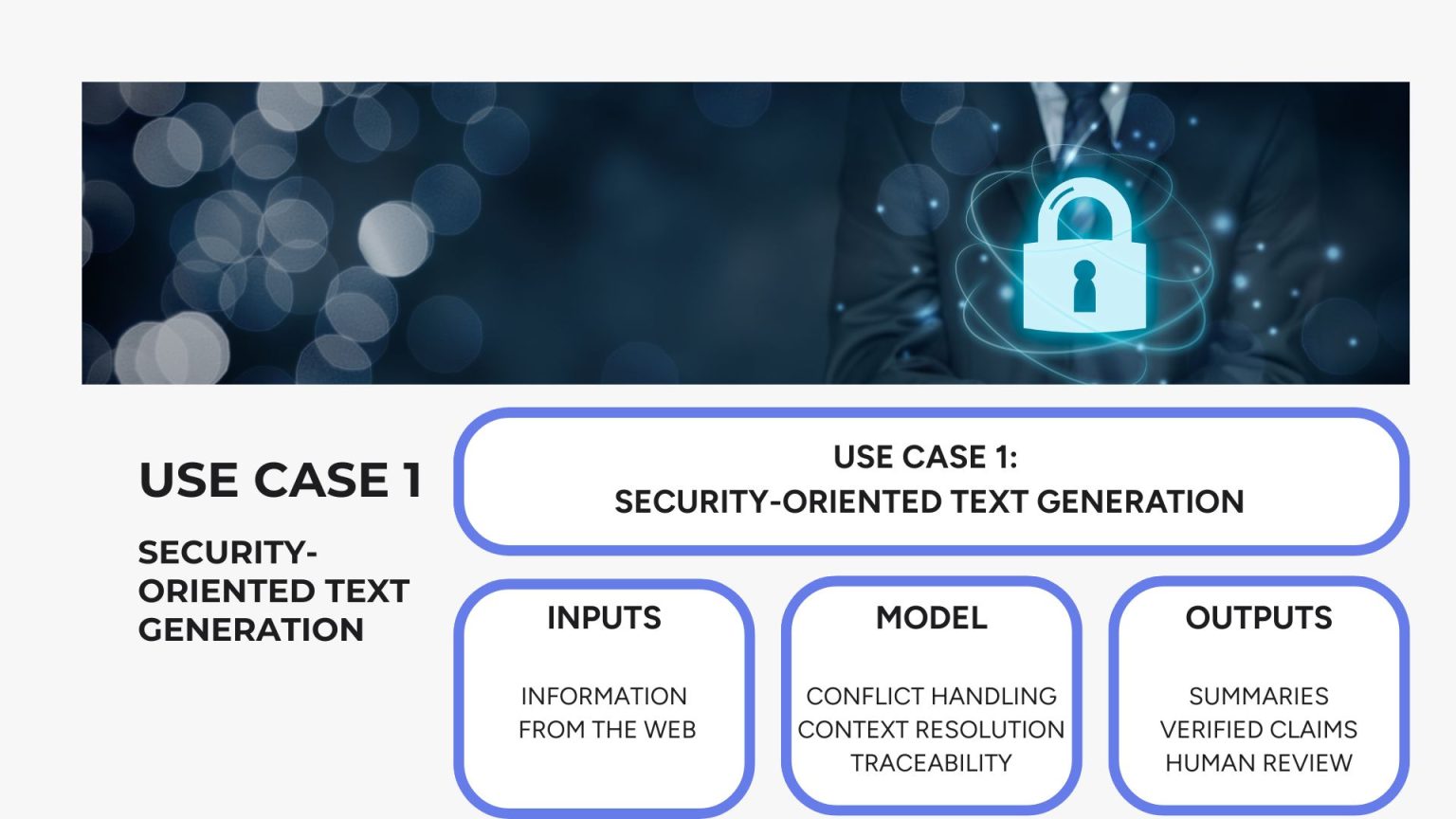

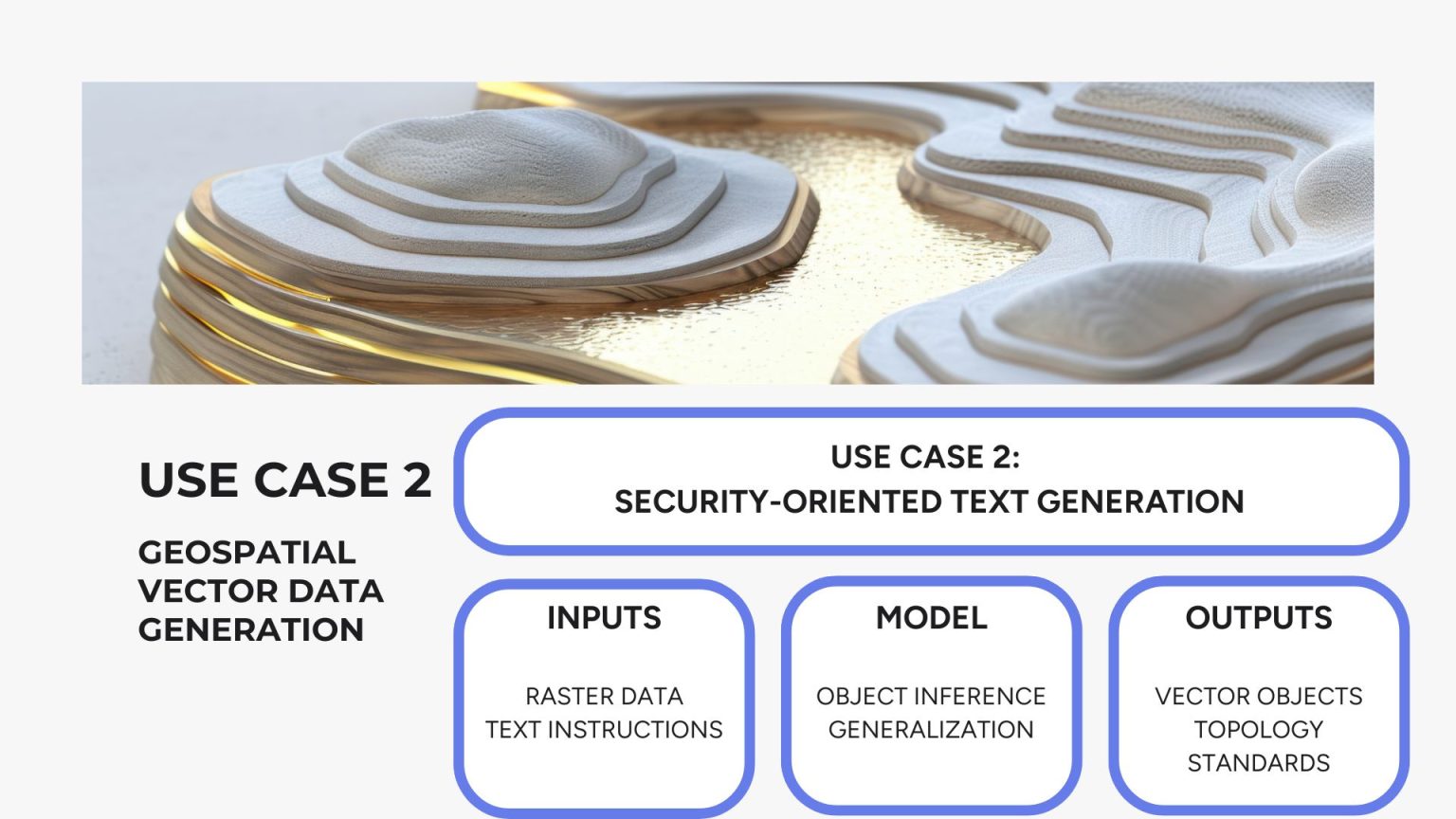

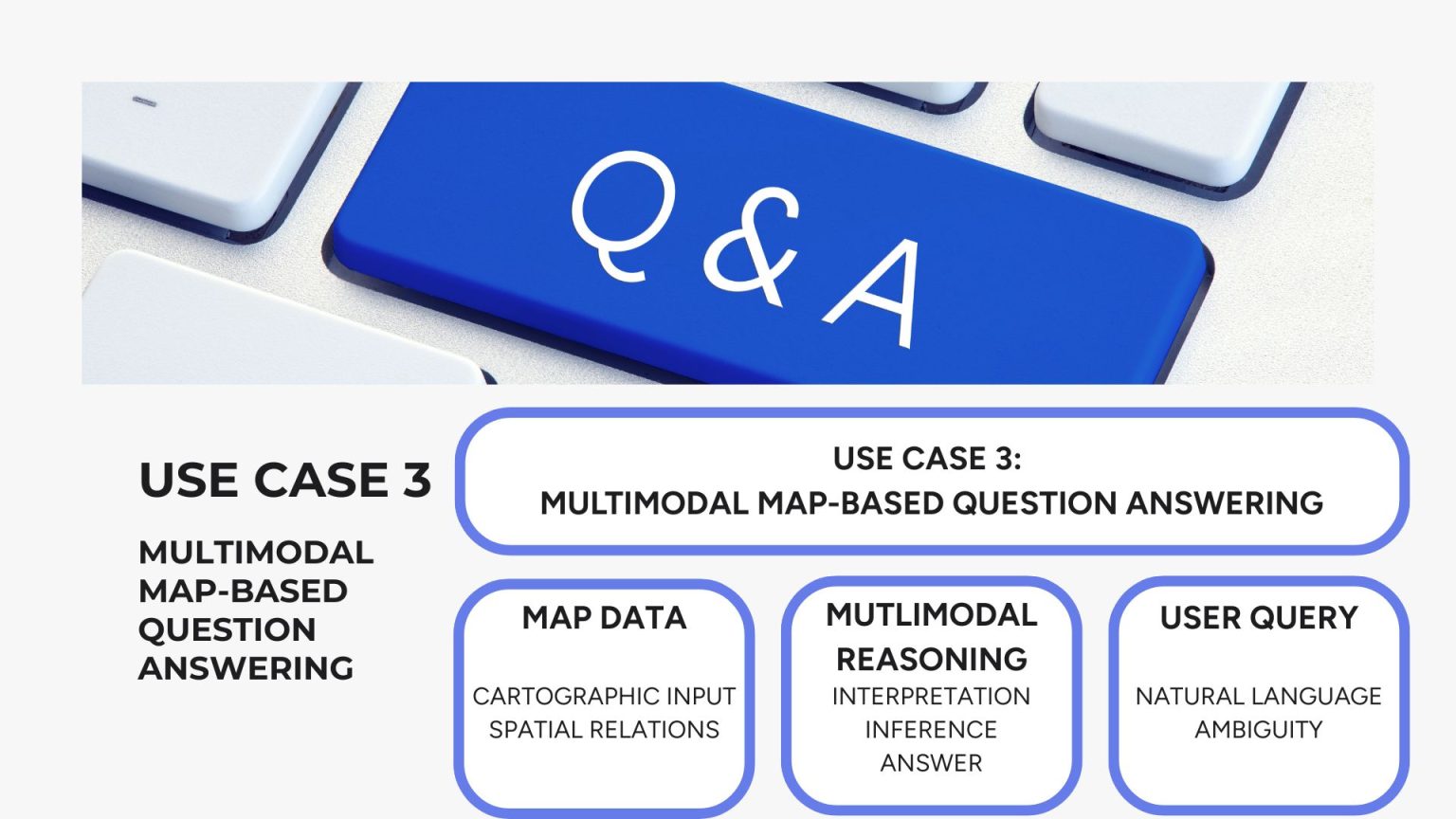

The use cases in GenSeC serve as structured research settings rather than isolated application examples. They are selected to represent recurring challenges in security-related information processing while covering different data modalities and interaction patterns.

Each use case informs the design of benchmarks, demonstrators, and evaluation criteria, and allows the project to study generative model behavior under realistic operational constraints.

Together, the use cases span textual, geospatial, and multimodal scenarios. This enables comparative analysis across domains and supports the development of evaluation methods that generalize beyond a single task or data type.

Benchmarks

Use-Case-Specific Benchmarks

GenSeC develops three benchmarks aligned with representative security-related applications. Each benchmark is designed to reflect domain-specific requirements while adhering to shared quality criteria for validity, reproducibility, and comparability:

- The benchmark for security-oriented text generation focuses on summarization and report generation from heterogeneous and multilingual sources. It evaluates coherence, contextual appropriateness, and factual reliability, with additional emphasis on robustness under misleading or contradictory input conditions.

- The geospatial vector data benchmark addresses the generation and generalization of geographic data. It evaluates compliance with established geospatial standards, correctness of object representation, and suitability for downstream analytical tasks. Particular attention is given to completeness, topological consistency, and behavior across different levels of spatial abstraction.

- The multimodal map-based question answering benchmark evaluates spatial reasoning over visual map representations. It examines the interpretation of geographic relationships, handling of vague spatial expressions, and resilience against adversarial queries that aim to distort geospatial understanding.

Holistic Benchmarking Framework

In addition to the use-case-specific benchmarks, GenSeC develops a holistic benchmarking framework that addresses cross-cutting evaluation dimensions. This framework integrates factuality, transparency, and security resilience independently of any single application scenario.

The holistic benchmark supports adversarial stress testing and enables comparative analysis across models and use cases. By combining automated metrics with expert-informed evaluation procedures, it addresses known limitations of accuracy-centered benchmarking approaches.

As part of the holistic benchmark, we will release an accompanying white paper that analyzes the theoretical limits of benchmarking foundation models in security-critical contexts. It will review key challenges for benchmark design, assess existing benchmarks with respect to data quality, safety, and security risks, and outline core properties of high-quality, holistic benchmarks, including factual reliability, robustness, transparency, and security compliance.

Demonstrators

GenSeC implements three demonstrators corresponding to the defined use cases. These demonstrators are designed as research instruments rather than end-user systems. Their purpose is to operationalize the benchmarks and to study the interaction between model adaptation, evaluation results, and system behavior:

- Text generation demonstrator

- Geospatial vector data demonstrator

- Multimodal map-based chatbot demonstrator

Consortium

GenSeC is carried out by a consortium that combines academic research excellence with applied industrial expertise.

The project is led by the German Research Center for Artificial Intelligence (DFKI), which contributes extensive experience in foundational AI research, language technology, multimodal systems, and evaluation methodologies, including work in security-related domains.

DFKI researchers involved in the project bring long-standing expertise in natural language processing, explainable AI, benchmarking of foundation models, and multimodal reasoning. This ensures a strong methodological foundation and close alignment with current scientific discourse.

The consortium further includes GAF AG, an industry partner with extensive expertise in earth observation, geospatial data processing, and large-scale mapping workflows. GAF AG contributes practical knowledge of operational requirements, geospatial standards, and data production pipelines, ensuring that the project remains grounded in real-world application constraints.

The collaboration between DFKI and GAF AG enables GenSeC to address both methodological and operational challenges in an integrated manner, bridging the gap between theoretical evaluation frameworks and applied security-relevant use cases.