Use Cases

The use cases in GenSeC serve as structured research settings rather than isolated application examples.

They are selected to represent recurring challenges in security-related information processing while covering different data modalities and interaction patterns. Each use case informs the design of benchmarks, demonstrators, and evaluation criteria, and allows the project to study the behavior of generative models under realistic operational constraints.

Together, the use cases span textual, geospatial, and multimodal scenarios. This enables comparative analysis across domains and supports the development of evaluation methods that generalize beyond a single task or data type.

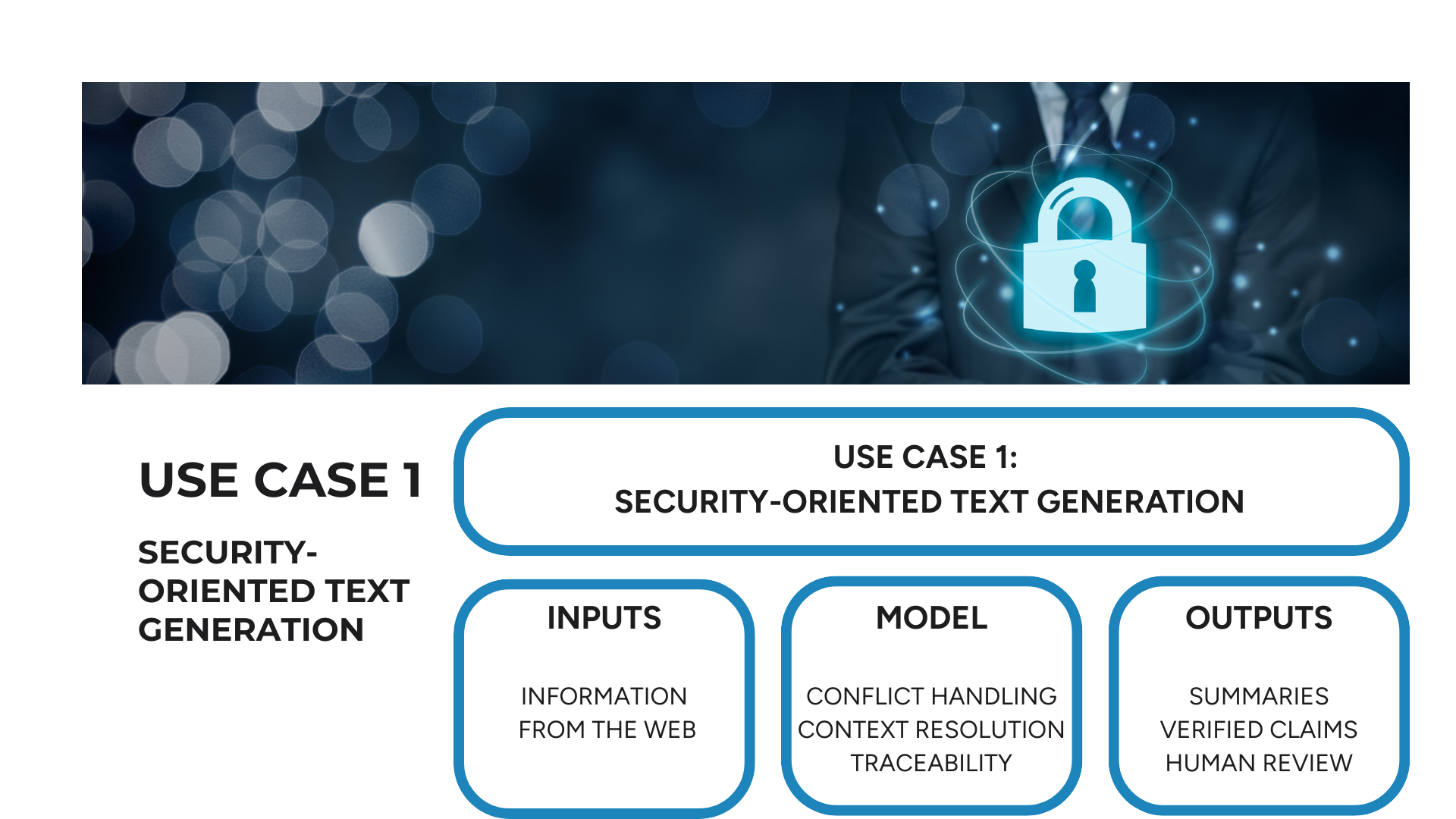

Use Case 1: Security-Oriented Text Generation

The first use case focuses on text generation and summarization in security-relevant contexts. Typical inputs include heterogeneous, partially structured, and multilingual sources such as reports, briefings, and open-source intelligence material. The research emphasis is on the factual reliability, coherence, and contextual appropriateness of the generated outputs.

This use case enables the investigation of how generative models handle conflicting information, underspecified prompts, and incomplete data. It also serves as a testbed for evaluating robustness against misleading input and for analyzing mechanisms that support traceability and human verification.

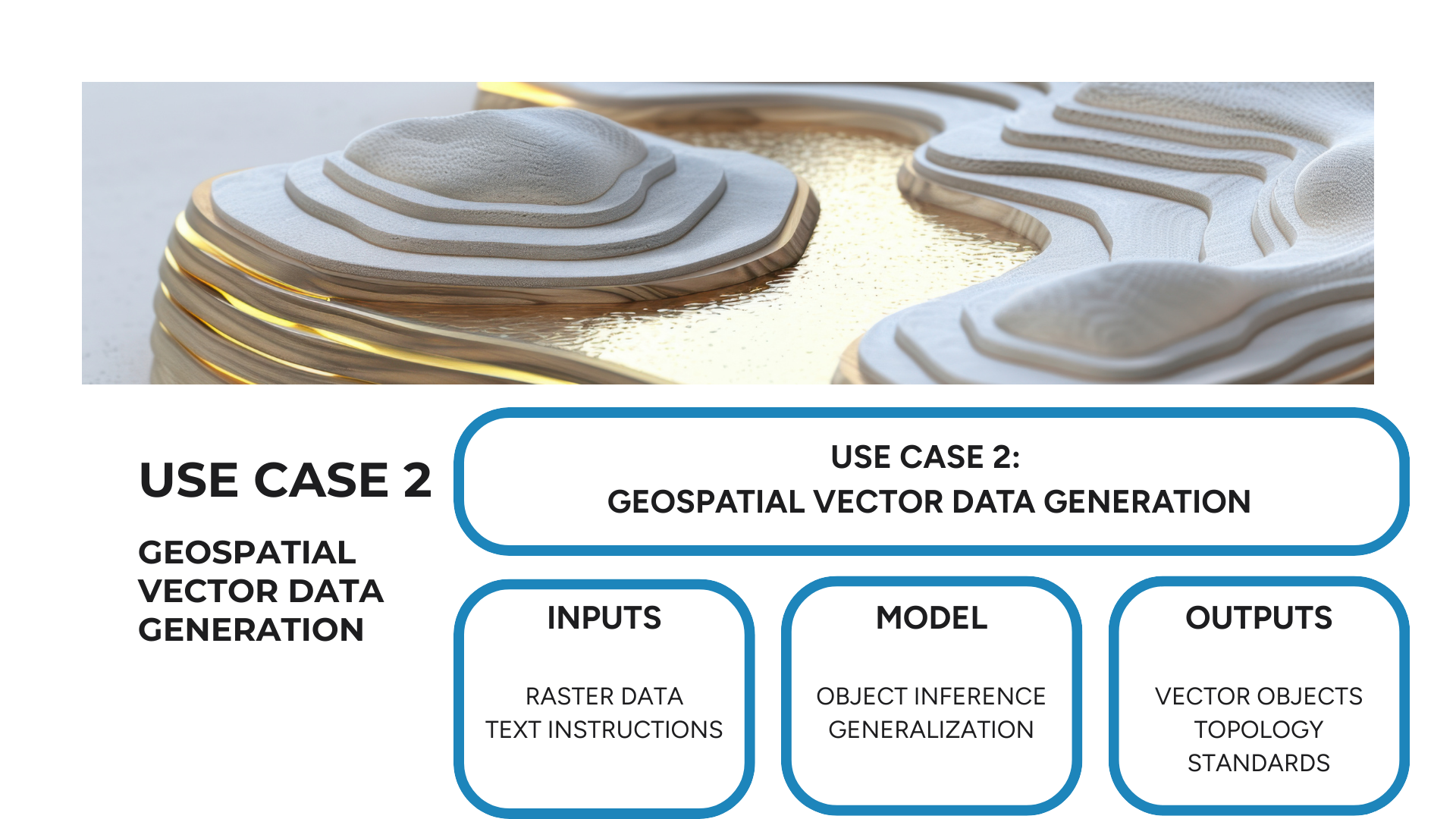

Use Case 2: Generation & Generalization of Geospatial Vector Data

The second use case addresses the generation of geospatial vector data from heterogeneous inputs, including raster data and textual instructions. The focus is on whether generative models can produce geographic representations that meet established quality and consistency standards.

Research questions include the correctness of object representation, topological consistency, and behavior across different levels of spatial generalization. This use case is particularly relevant for assessing whether AI-generated geographic data can be safely integrated into downstream analytical and planning workflows.

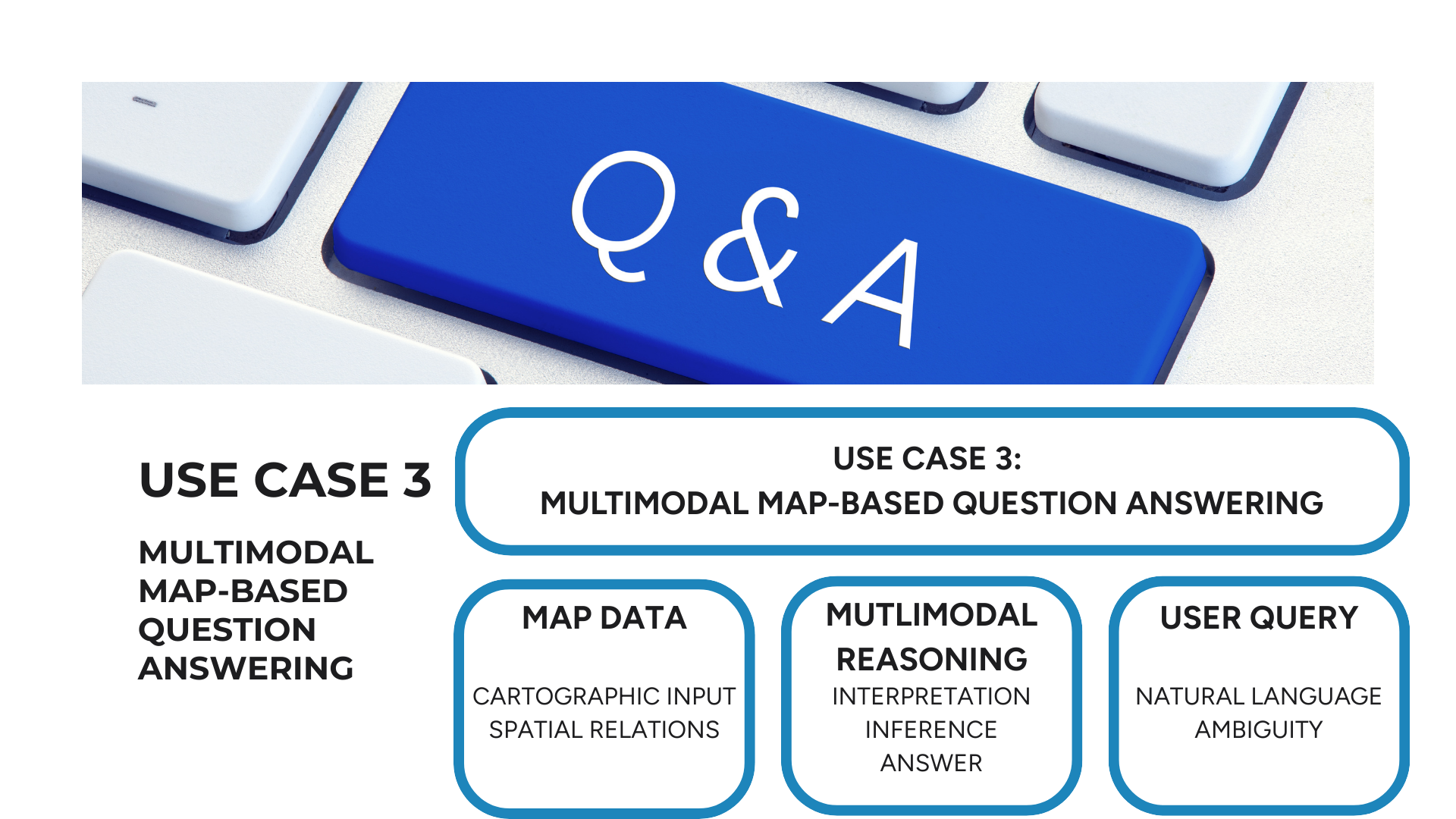

Use Case 3: Multimodal Map-Based Question Answering

The third use case investigates multimodal question answering over maps and spatial data. It combines visual interpretation of cartographic representations with natural language understanding and spatial reasoning.

The research focuses on how models interpret geographic relationships, handle vague or ambiguous spatial language, and respond to adversarial or misleading queries. This use case provides insight into the reliability and transparency of multimodal reasoning in settings where spatial context is critical.